Reciprocal-Attention

This is a Human-Robot Interaction (HRI) project, built for QTRobot (www.luxai.com) to increase perceived engagement and to measure attentiveness, especially when engaging with children.

core application - engagement tracking for children

This project was recently prototyped with Dr. Shruti Chandra (UNBC).

overview

In this project we worked on the QT robot's main selling point, which is engagement. To improve it, we created an interactive eye mechanism that uses facial landmark detection (computer vision) to respond to your gaze. With this simple prototype we could:

measure what factors affect engagement

understand when and why children's attention is lost

identify ways to improve interaction rates in HRI
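
As an illustration of how "when attention is lost" could be measured, here is a minimal sketch that summarizes a log of eye-contact samples. The log format (a time-ordered list of `(timestamp, looking)` pairs) and the function name `summarize_attention` are assumptions made for this example; the prototype may record its data differently.

```python
from typing import List, Tuple

# Hypothetical log entry: (timestamp in seconds, was eye contact detected?)
Sample = Tuple[float, bool]


def summarize_attention(samples: List[Sample]) -> dict:
    """Summarize a time-ordered log of eye-contact samples.

    Each sample's state is assumed to hold until the next sample,
    so the last sample only marks the end of the recording.
    """
    if len(samples) < 2:
        return {"attentive_fraction": 0.0, "look_away_episodes": []}

    attentive_time = 0.0
    episodes = []        # (start, end) of each look-away episode
    away_start = None    # start time of the episode in progress, if any

    for (t0, looking), (t1, _) in zip(samples, samples[1:]):
        if looking:
            attentive_time += t1 - t0
            if away_start is not None:
                episodes.append((away_start, t0))
                away_start = None
        elif away_start is None:
            away_start = t0

    # Close an episode that is still open when the recording ends.
    if away_start is not None:
        episodes.append((away_start, samples[-1][0]))

    total = samples[-1][0] - samples[0][0]
    fraction = attentive_time / total if total > 0 else 0.0
    return {"attentive_fraction": fraction, "look_away_episodes": episodes}
```

The list of look-away episodes gives the "when"; relating those timestamps to what the robot was doing at the time would be one way to explore the "why".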

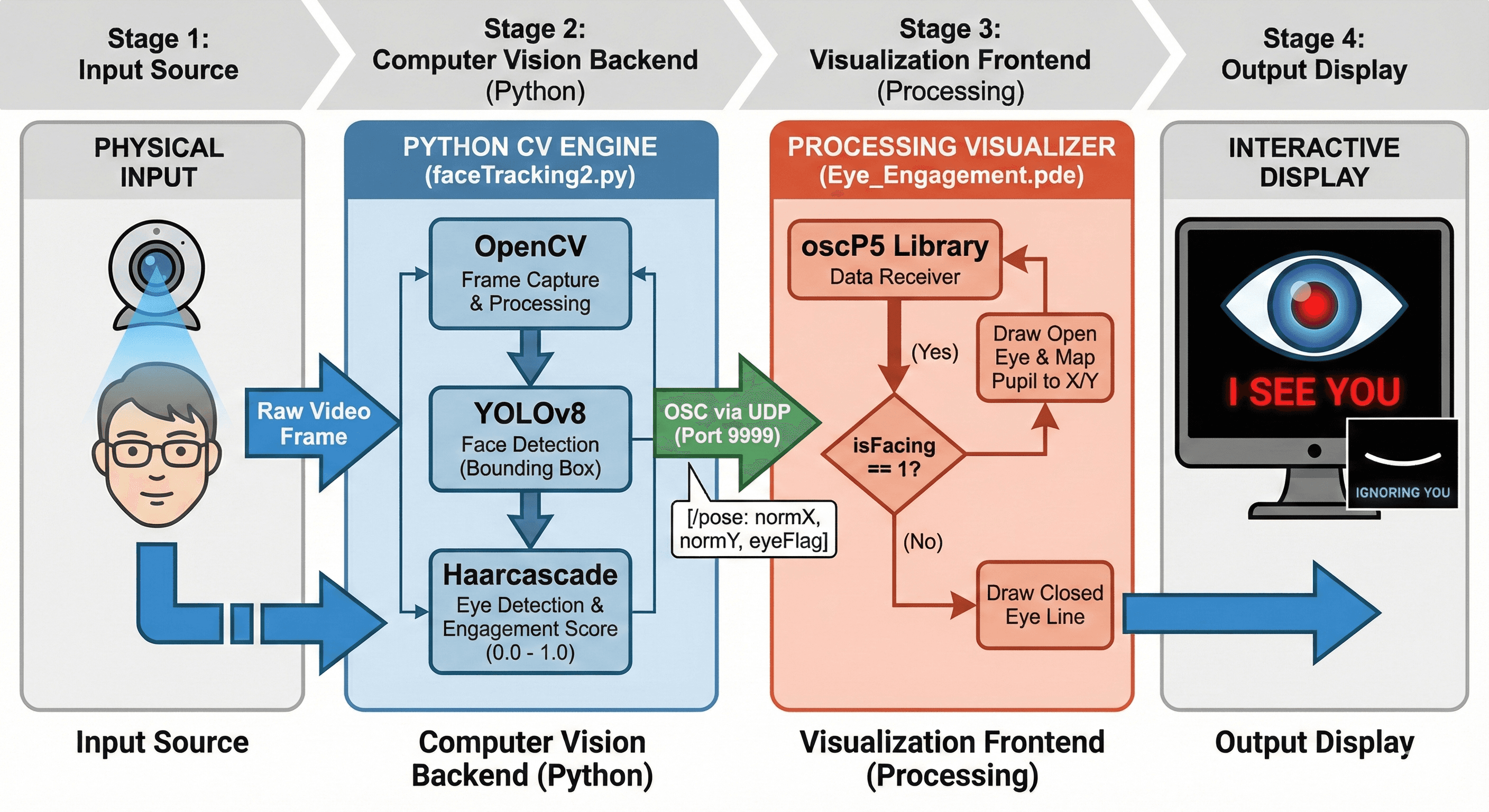

how it works

Python tracks your face using computer vision

checks whether you're making eye contact by detecting your eyes

sends the data via OSC (Open Sound Control) to a Processing visualization

Processing draws a digital eye that either:

tracks your movement (when you're looking at it)

closes and ignores you (when you look away)
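
The decision step above can be sketched in plain Python. This is a minimal sketch, not the project's actual code: it assumes the detectors return boxes as `(x, y, width, height)` in full-frame pixel coordinates, and it treats "two eyes found in the upper half of the face box" as eye contact. The names `eyes_inside_face` and `gaze_state` and the two-eye rule are assumptions for illustration.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def eyes_inside_face(face: Box, eyes: List[Box]) -> int:
    """Count eye boxes whose center lies in the upper half of the face box."""
    fx, fy, fw, fh = face
    count = 0
    for ex, ey, ew, eh in eyes:
        cx, cy = ex + ew / 2, ey + eh / 2
        if fx <= cx <= fx + fw and fy <= cy <= fy + fh / 2:
            count += 1
    return count


def gaze_state(
    face: Optional[Box], eyes: List[Box], frame_size: Tuple[int, int]
) -> Tuple[bool, float, float]:
    """Return (looking, x, y), with x and y as the face center in 0..1.

    Assumed rule: the viewer is "looking" when at least two eyes are detected
    inside the face. With no face, report not looking and a centered position.
    """
    if face is None:
        return (False, 0.5, 0.5)
    fx, fy, fw, fh = face
    width, height = frame_size
    x = (fx + fw / 2) / width
    y = (fy + fh / 2) / height
    looking = eyes_inside_face(face, eyes) >= 2
    return (looking, x, y)
```

Normalizing the face center to the 0..1 range keeps the visualization independent of the camera resolution: the drawing side only has to scale the values to its own canvas size.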

tech stack

python 3 — face & eye detection

opencv — camera input and image processing

yolo v8 — face detection model

haarcascade — eye detection

osc (open sound control) — data transmission

processing — real-time visualization

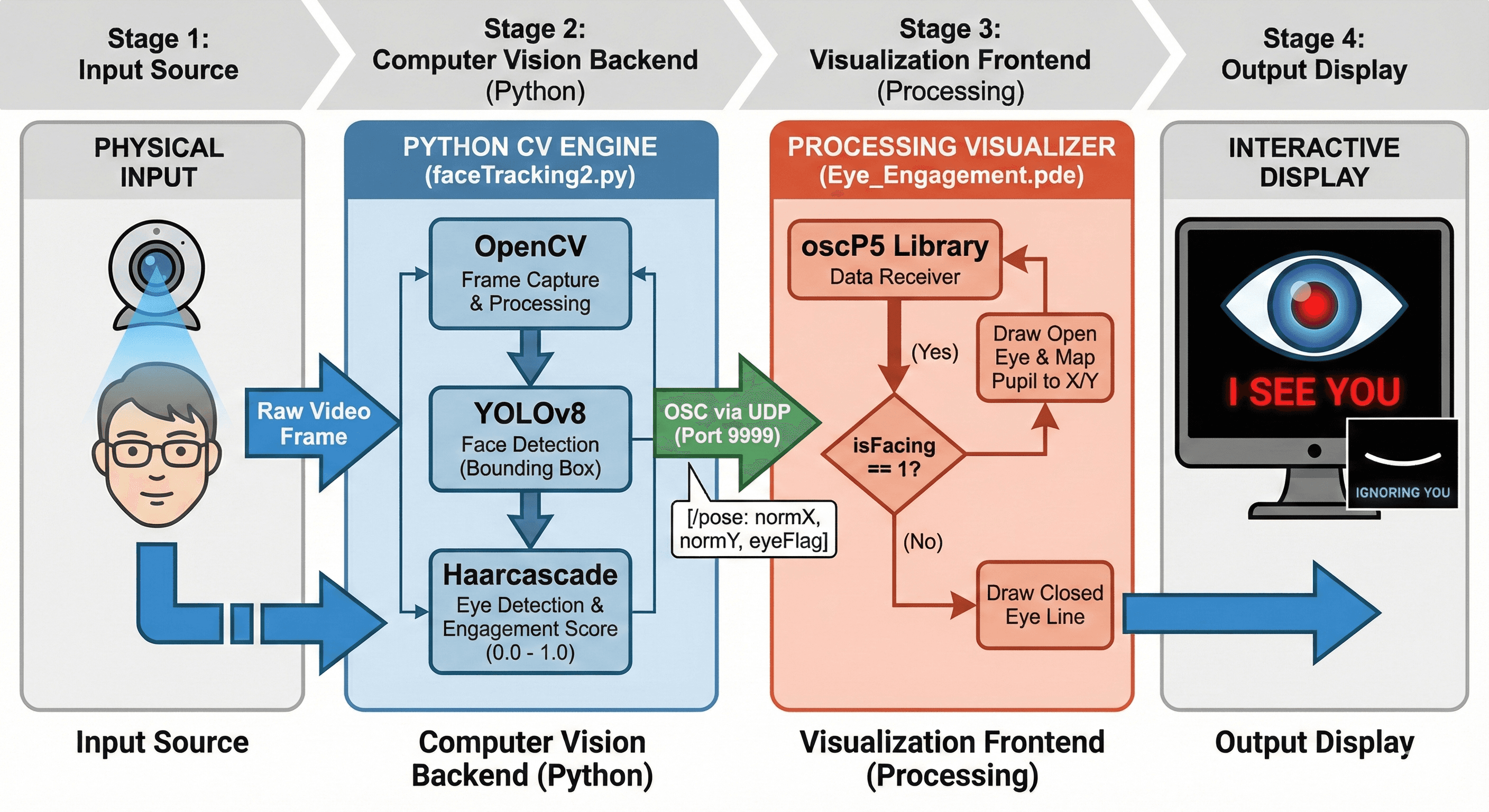

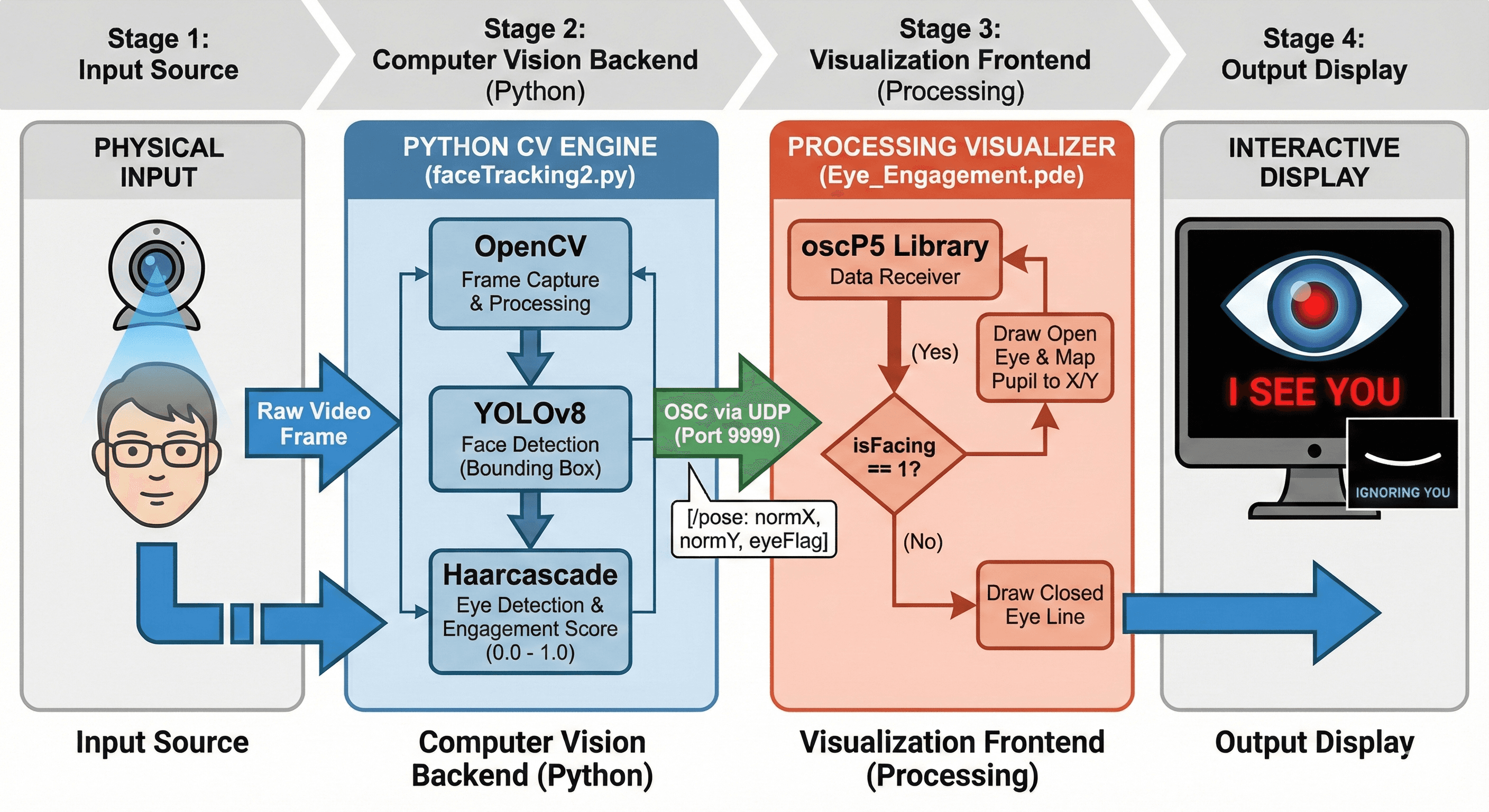
